Everything You Need to Know about Cache

When most people hear the word "cache", the first thing that comes to mind is money, but to IT people it means only one thing: a place where a computer stores recently used information. More precisely, a cache is a data storage technique that allows data or files to be accessed at a higher speed.

In computing, active data is often cached to shorten data access times, reduce latency and improve input/output (I/O). Because almost all application workload is dependent upon I/O operations, caching is used to improve application performance. Web browsers such as Internet Explorer, Chrome, Firefox, and Safari use a browser cache to improve performance for frequently accessed web pages.

When you visit a webpage, the files your browser requests are stored on your computer's storage, in the browser's cache.

Photo Credits: MaxCDN

If you click "back" and return to that page, your browser can retrieve most of the files it needs from the cache instead of requesting that they all be sent again. This approach is called read cache. It is much faster for your browser to read data from the browser cache than to download the files from the web server again.
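The read-cache idea can be illustrated with a minimal sketch. The `ReadCache` class and the `fake_fetch` function are hypothetical names made up for this example; a real browser cache also handles expiry, validation, and storage limits, none of which are modelled here.

```python
from typing import Callable, Dict


class ReadCache:
    """Keeps a local copy of every file it has fetched once."""

    def __init__(self, fetch: Callable[[str], bytes]) -> None:
        self._fetch = fetch                  # slow path, e.g. a network request
        self._store: Dict[str, bytes] = {}   # local copies, keyed by URL
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self._store:
            # Cache hit: serve the local copy, no request is sent.
            self.hits += 1
            return self._store[url]
        # Cache miss: fetch once and remember the result for next time.
        self.misses += 1
        data = self._fetch(url)
        self._store[url] = data
        return data


def fake_fetch(url: str) -> bytes:
    """Stand-in for a real download (assumption for this sketch)."""
    return f"contents of {url}".encode()


cache = ReadCache(fake_fetch)
first = cache.get("/index.html")    # miss: goes to fake_fetch
second = cache.get("/index.html")   # hit: served from the local store
```

The second call never reaches `fake_fetch`, which is the saving the browser gets when you click "back".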

Many of us wonder why style changes on a website are not visible immediately. Why are we asked to clear the browser cache? Why do we need a cache at all? And how can this problem be solved? The answer is, first of all, that a cache is not a problem, an issue, or a bug, as many non-IT people think. A cache is an extremely important part of modern computers. It is actually a solution to the problem of maximizing speed.

If browsers did not have a cache, websites would be extremely slow. The catch is that, depending on server configuration, files can be kept in the local cache for several days, so visitors may keep seeing an outdated copy. To avoid this, programmers should version their files, for example by changing the file name or URL whenever the content changes. In that case, all style changes made to your website will be visible immediately.
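One common way to version files is to derive a short tag from the file's content and put it in the URL. The sketch below assumes a query-string scheme (`?v=...`) and a hypothetical helper named `versioned_url`; build tools often put the hash in the file name instead.

```python
import hashlib


def versioned_url(path: str, content: bytes) -> str:
    """Return the path with a short content hash appended as a version tag.

    Because the tag depends only on the content, the URL changes exactly
    when the file changes, so the browser treats it as a new resource.
    """
    digest = hashlib.sha256(content).hexdigest()[:8]
    return f"{path}?v={digest}"


old_url = versioned_url("/static/style.css", b"body { color: black; }")
new_url = versioned_url("/static/style.css", b"body { color: navy; }")
same_url = versioned_url("/static/style.css", b"body { color: black; }")
```

Here `old_url` and `new_url` differ, so the edited stylesheet is requested again, while `same_url` equals `old_url` and can still be served from the cache.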

What Are Cache Algorithms?

Cache algorithms provide instructions for how the cache should be maintained. Some examples of cache algorithms include:

Least Frequently Used (LFU) is a type of cache algorithm used to manage memory within a computer. The system keeps track of the number of times each block in memory is referenced. When the cache is full and requires more room, the system purges the item with the lowest reference frequency.
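A minimal LFU sketch, assuming a small in-memory cache. The `LFUCache` class is illustrative only: it finds the victim with a linear scan, and ties go to the entry that was inserted first, which is a choice made for this example rather than part of the algorithm's definition.

```python
from typing import Any, Dict, Hashable, Optional


class LFUCache:
    """Evicts the entry with the lowest reference count when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._values: Dict[Hashable, Any] = {}
        self._counts: Dict[Hashable, int] = {}   # references per key

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._values:
            return None
        self._counts[key] += 1                   # every read is a reference
        return self._values[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key not in self._values and len(self._values) >= self.capacity:
            # Purge the key with the lowest reference count.
            victim = min(self._counts, key=self._counts.get)
            del self._values[victim]
            del self._counts[victim]
        self._values[key] = value
        self._counts[key] = self._counts.get(key, 0) + 1


lfu = LFUCache(capacity=2)
lfu.put("a", 1)
lfu.put("b", 2)
lfu.get("a")
lfu.get("a")      # "a" is now referenced more often than "b"
lfu.put("c", 3)   # cache is full, so "b" is purged
```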

Least Recently Used (LRU) discards the least recently used items first. This algorithm requires keeping track of what was used and when, which is expensive if one wants to make sure the algorithm always discards the least recently used item.

General implementations of this technique keep "age bits" for cache lines and track the least recently used cache line based on those age bits. In such an implementation, every time a cache line is used, the age of all other cache lines changes.
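In software, the same policy is often sketched with an ordered dictionary instead of age bits: the position in the dictionary plays the role of the age. The `LRUCache` class below is a hypothetical example of that substitute technique, not a model of how hardware tracks cache lines.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Evicts the least recently used entry when capacity is exceeded."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Ordered from least recently used (front) to most recently used (back).
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)      # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)   # drop the least recently used


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")      # "a" becomes the most recently used entry
lru.put("c", 3)   # "b" is now the oldest, so it is discarded
```

For caching the results of a function, Python's standard library already offers `functools.lru_cache`, which applies the same policy.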

Most Recently Used (MRU) discards the most recently used items first. In findings presented at the 11th VLDB conference, Chou and DeWitt noted that "When a file is being repeatedly scanned in a reference pattern, MRU is the best replacement algorithm."

Subsequently, other researchers presenting at the 22nd VLDB conference noted that for random access patterns and repeated scans over large datasets (sometimes known as cyclic access patterns), MRU cache algorithms have more hits than LRU due to their tendency to retain older data. MRU algorithms are most useful in situations where the older an item is, the more likely it is to be accessed.

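The effect of a repeated scan can be seen in a small simulation. The sketch below assumes a toy workload (four items scanned in a loop through a cache that holds three) and a hypothetical helper, `count_hits`; it is an illustration, not a reproduction of the experiments cited above.

```python
from collections import OrderedDict
from typing import Hashable, Iterable


def count_hits(accesses: Iterable[Hashable], capacity: int,
               evict_most_recent: bool) -> int:
    """Count cache hits for an access sequence.

    evict_most_recent=True simulates MRU, False simulates LRU.
    """
    cache: "OrderedDict[Hashable, bool]" = OrderedDict()  # oldest use first
    hits = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)        # key is now the most recently used
        else:
            if len(cache) >= capacity:
                # last=True removes the most recently used entry (MRU),
                # last=False removes the least recently used one (LRU).
                cache.popitem(last=evict_most_recent)
            cache[key] = True
    return hits


scan = [0, 1, 2, 3] * 5                   # the same four items, scanned five times
mru_hits = count_hits(scan, capacity=3, evict_most_recent=True)
lru_hits = count_hits(scan, capacity=3, evict_most_recent=False)
```

On this workload LRU never gets a hit, because each item is evicted just before it is needed again, while MRU keeps older items around long enough to reuse them.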
Types of Cache

Write-around cache allows write operations to be written to storage, skipping the cache altogether. This keeps the cache from becoming flooded when large amounts of write I/O occur. The disadvantage is that data is not cached unless it is read from storage. As such, the initial read operation will be comparatively slow because the data has not yet been cached.

Write-through cache writes data to both the cache and storage. The advantage of this approach is that newly written data is always cached, thereby allowing the data to be read quickly. A drawback is that write operations are not considered to be complete until the data is written to both the cache and primary storage. This causes write-through caching to introduce latency to write operations.

Write-back cache is similar to write-through caching in that all write operations are directed to the cache. The difference is that once the data is cached, the write operation is considered complete. The data is later copied from the cache to storage. In this approach, there is low latency for both read and write operations. The disadvantage is that, depending on the caching mechanism used, the data may be vulnerable to loss until it is committed to storage.
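The three write policies can be compared side by side in a toy model. In the sketch below, `CachedStore` is a hypothetical class in which both the cache and the backing storage are plain dictionaries, and `flush` stands in for whatever mechanism a real write-back cache uses to commit data later.

```python
from typing import Dict, Set

POLICIES = {"write-around", "write-through", "write-back"}


class CachedStore:
    """Toy cache in front of a slower backing store."""

    def __init__(self, policy: str) -> None:
        if policy not in POLICIES:
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.cache: Dict[str, str] = {}
        self.storage: Dict[str, str] = {}
        self.dirty: Set[str] = set()      # cached keys not yet in storage

    def write(self, key: str, value: str) -> None:
        if self.policy == "write-around":
            self.storage[key] = value     # skip the cache altogether
            self.cache.pop(key, None)     # drop any stale cached copy
        elif self.policy == "write-through":
            self.cache[key] = value       # complete only after both writes
            self.storage[key] = value
        else:                             # write-back
            self.cache[key] = value       # complete as soon as it is cached
            self.dirty.add(key)

    def read(self, key: str) -> str:
        if key not in self.cache:         # miss: load from storage, then cache
            self.cache[key] = self.storage[key]
        return self.cache[key]

    def flush(self) -> None:
        """Copy pending write-back data from the cache to storage."""
        for key in self.dirty:
            self.storage[key] = self.cache[key]
        self.dirty.clear()


around = CachedStore("write-around")
around.write("k", "v")    # in storage only; the first read will be a miss

through = CachedStore("write-through")
through.write("k", "v")   # in both cache and storage

back = CachedStore("write-back")
back.write("k", "v")      # in cache only until flush() is called
```

Until `back.flush()` runs, the value exists only in the cache, which is the window in which a write-back cache can lose data.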

Popular Caches

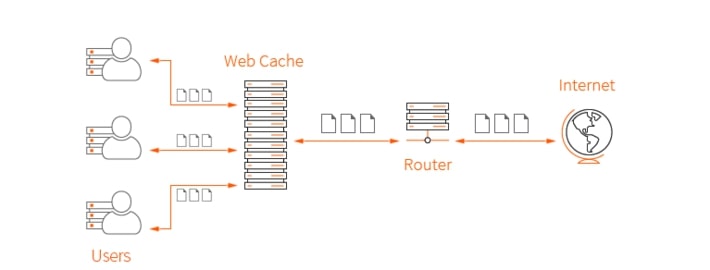

Cache Server

A cache server is a dedicated network server, or a service acting as a server, that saves web pages or other Internet content locally. By storing previously and frequently requested information, it saves bandwidth and makes browsing already cached websites faster, since they are served locally instead of the data travelling from across the globe.

Disk Cache

A disk cache is a cache memory that is used to speed up storing and accessing data on the host hard disk. It enables faster processing of read and write commands and other input and output processes between the hard disk, the memory, and computing components.

Cache Memory

Cache memory is a small type of computer memory that provides high-speed data access to a processor and stores frequently used computer programs, applications, and data. It is the fastest memory in a computer, and is typically integrated onto the motherboard or embedded directly in the processor. In other words, it is random access memory (RAM) that a computer microprocessor can access more quickly than it can access regular RAM. Although a RAM cache is much faster than a disk-based cache, cache memory is much faster than a RAM cache because of its proximity to the CPU.

Flash Cache

Flash cache is the temporary storage of data on NAND flash memory chips, often in the form of solid-state drive (SSD) storage, to enable requests for data to be fulfilled with greater speed than would be possible if the cache were located on a traditional hard disk drive (HDD).

How to Increase Cache Memory

Cache memory is a part of the CPU complex and is therefore either included on the CPU itself or is embedded into a chip on the system board. Typically, the only way to increase cache memory is to install a next-generation system board and a corresponding next-generation CPU. Some older system boards included vacant slots that could be used to increase the cache memory capacity, but most newer system boards do not include such an option.

How to Clear Cache in Different Browsers

Author:

Lusine Mkhitaryan

Published:

January 12, 2018

Last updated:

December 30, 2020